Welcome to Big O Notation by the Graphic Girls!

My name is Big O! I am an adorable donut representing Big O itself!This webpage was made by Anastasiya Markova, Maryam Almahashnah, and Zoe Ludena. Any cute drawing you see on this website were drawn by Zoe!

I hope you are as excited as me to learn all about Big O Notation. This web page aims to teach you about the history of Big O notation, why it is relevant to you as a programmer, and how you can calculate it from other algorithms.

Introduction to Big O Explained Video

What is Big O Notation?

Big O notation is a fundamental tool used to analyze the cost of an algorithm. It gives the upper-bound runtime or worst-case complexity of an algorithm. This allows one to classify algorithms depending on their run time or space requirements.

Big O notation was invented by German mathematicians Paul Bachmann, Edmund Landau, and others. It is also called Bachmann-Landau notation or asymptotic notation. The letter represents the input size (this can be the length of a list of a given integer). The letter was chosen by Bachnmann to stand for Ordnung, which means the order of approximation.

Why is Big O Notation Important?

Big O Notation is essential if you want to know how algorithms will scale. For example, if you are designing a big website and have a lot of users the time it takes to handle those requests are important. You need to know how to store all of this data efficiently, so when you write code it does not take a million years to run. Big O Notation gives you a high level idea of which algorithms are fast or slow and the tradeoffs.

Fun fact!

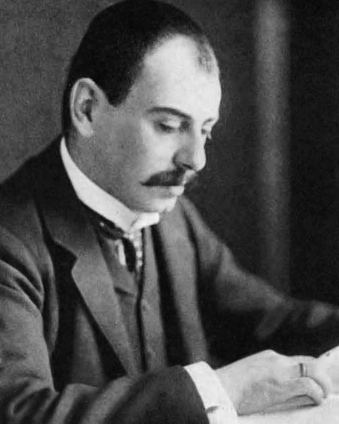

Big O is modeled after Edmund Landau! This picture of Landau is from Wikipedia.

How do you go about calculating Big O?

Big O Notation Rules:

Step 1: Break your algorithm/code into individual operations.

def func(n):

total = 0

for elem in range(n):

total += elem

print(total)

Here, we can break the code into the following operations:

- Initialize the variable

totalto 0 - Iterate through the range of the given value n and add each number to

total - Print the value of

totalafter each addition

Step 2: Calculate the Big O of each operation.

def func(n):

total = 0

for elem in range(n):

total += elem

print(total)

Here, we can outline the time complexity of each line:

- The blue lines compute only once.

- In our case the blue lines all take time because the computer performs just one opration.

- The red lines compute n times.

- Each line inside the for loop takes and is performed n times. So the total time complexity for the for loop is

- Combining the operations inside the for-loop and outside the for-loop, we get the total time complexity of

Step 3: Remove Constants.

Let's Plot our time complexity!

We can now see a linear trend with respect to . Remember that Big O is the worst case time complexity. In this case, the worst case is if n is approaching infinity, and as this happens the constants become less relevant. This allows us to disregard the constants when calculating Big O. You can click the button below to see what happends when we remove the constants. Notice that the first two bars seem to shift downwards, while the rest seem to stay the same height. This is in fact not true; all bars decrease by two, but as approaches infinity constant are less relevant so we can ignore them.

Our Time Complexity:

Step 4: Find the highest order term.

value: 1

Now, remember the official definition of Big O is

if there exists possible constants , such that for all .

- Try checking different time complexities and adjusting the . Can you tell what is Big O of this function?

- You may notice that there are multiple functions that fullfil for all . We want to find the one that has the slowest rate of growth.

- We can usually find a function by looking at the fastest growing term.

- For example if our actual time complexity is described by then there exists a function for some which is the upper bound, since is the fastest growing term.

Constant Time

The easiest and best time complexity!

When the algorithm is not dependent on the input size, it has constant time complexity . i.e., the runtime will always be the same regardless of the input size.

Some common algorithms in constant time are: a stack's push, pop, peek, a queue's enque, deque, peek, a linked list's insertion or deletion, and a hash table's insertion, deletion, and retrievel (when there are no collisions).

Examples

- Assigning a variable is done in constant time.

- Conditional statements are done in constant time.

- Accessing an element inside of a list using an index is in constant time.

Let's look at some code examples of the above!

x = 1 # The time complexity of this line is O(1)!

lst = [1, 2, 3] # and so is this one.

y = 'hello!' # also this one!

# some other examples:

if a > b: # this is O(1) because we are simply comparing a and b

return True # simply returning true also takes O(1)

else:

return False

one = [lst][0] # This is in constant time!

# another example:

lst = [1, 2, 3, 4] # remember, initializing a varable only takes O(1)

i = 0 # O(1)

while i < range(4): # here we are simply comparing a constant value to another, which also takes O(1)

if i == 0:

break

It's amazing when we can work in constant time. Try to use things that are in constant time where you can to reduce run times in the future!

Practice Questions

Select which time complexity best describes the given code.

Problem 1

def function(n):

if (n == 1):

return

for i in range(1, n + 1):

for j in range(1, n + 1):

print("*", end = "")

break

print()

Select Answer From The Following Choices

Sources

We used a variety of sources to choose examples, provide accurate information, and to make our visualizations better.

AndrewBadr. "Time Complexity." Time Complexity - Python Wiki, 1 Jan. 2023

"Big O Notation in Data Structure: An Introduction: Simplilearn." Big O Notation in Data Structures, Simplilearn, 6 Nov. 2023

"Big O Notation." Wikipedia, Wikimedia Foundation, 5 Mar. 2024

Huang, Shen. "What Is Big O Notation Explained: Space and Time Complexity." freeCodeCamp.Org, freeCodeCamp.org, 8 Dec. 2022

Prado, Kelvin Salton do. Understanding Time Complexity with Python Examples, Towards Data Science, 4 Mar. 2019

Rowell, Eric. Know Thy Complexities! Big-O Algorithms, 2016

"Web Safe Color Chart." HTML Color Codes, htmlcolorcodes.com. Accessed 28 Feb. 2024.

OpenAI. "ChatGPT 3.5"